Abstract

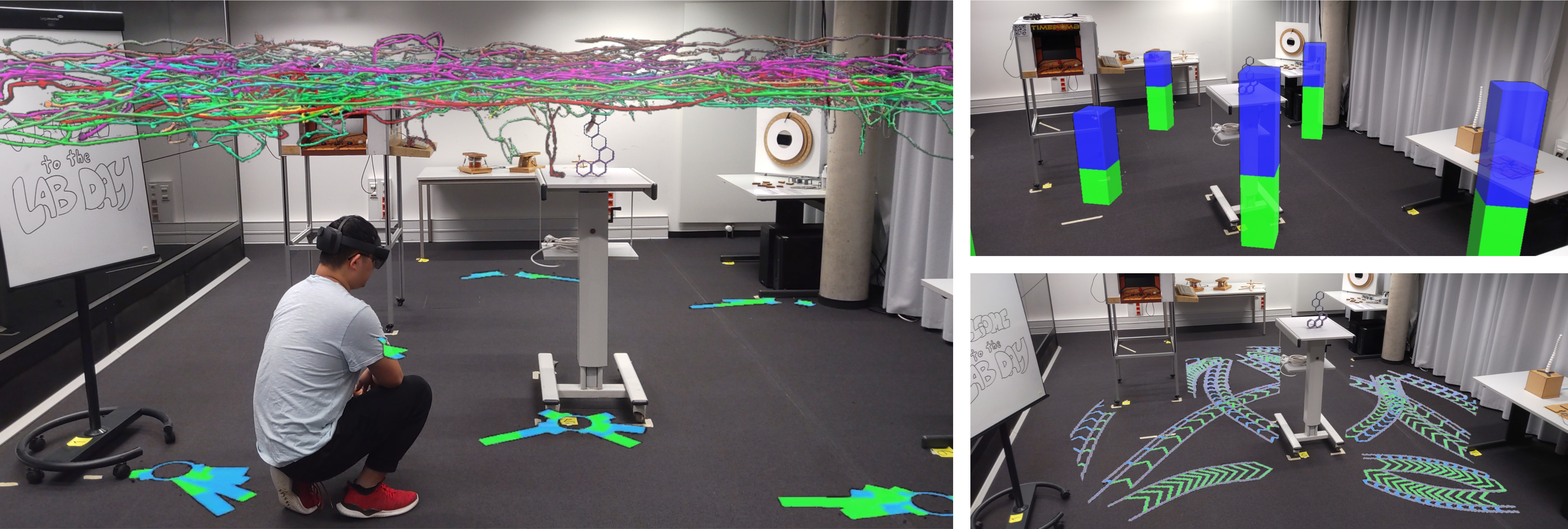

This paper presents PEARL, a mixed-reality approach to the analysis of human movement data in situ. As the physical environment shapes human motion and behavior, the analysis of such motion can benefit from the direct inclusion of the environment in the analytical process. We present methods for exploring movement data in relation to surrounding regions of interest, such as objects, furniture, and architectural elements. We introduce concepts for selecting and filtering data through direct interaction with the environment, and a suite of visualizations for revealing aggregated and emergent spatial and temporal relations. More sophisticated analysis is supported through complex queries comprising multiple regions of interest. To illustrate the potential of PEARL, we develop an Augmented Reality-based prototype and conduct expert review sessions and scenario walkthroughs in a simulated exhibition. Our contribution lays the foundation for leveraging the physical environment in the in-situ analysis of movement data.

Media: Videos, Slides, and Supplemental Material

30-Second Video Preview

10-min Recorded Talk

5-min Video Figure

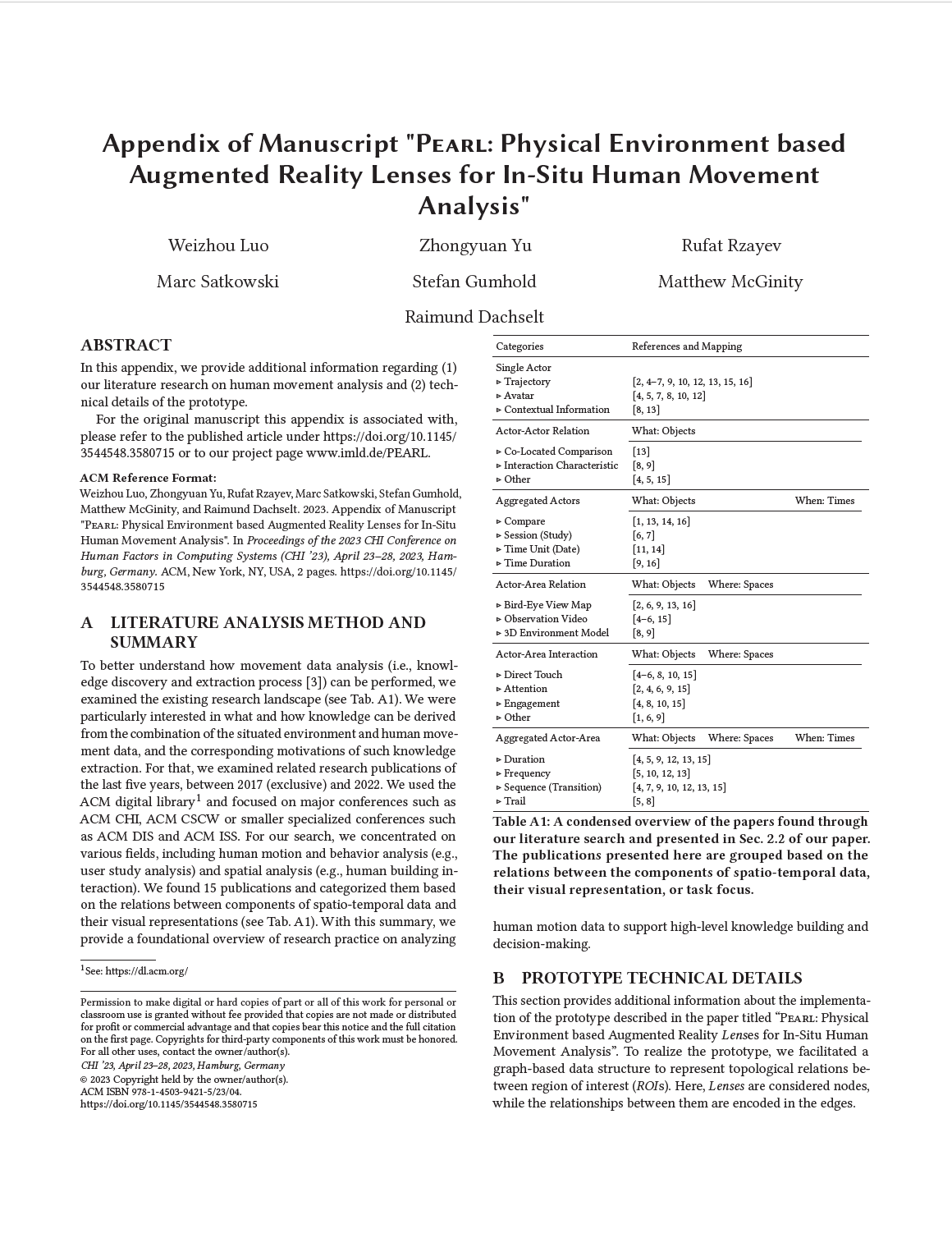

Supplemental Material

We provide recorded human movement data captured in our demo room. A detailed README.md in the folder describes the included files and folders.

We also provide all materials related to the expert review study: (1) a pre-study questionnaire, (2) the PEARL system introduction slides, (3) a guided walkthrough script, (4) reading materials for the envisioning scenario feedback session, and (5) the post-interview questions. The study procedure is described in Sec. 6.3 of our paper.

Additionally, we provide transcribed comments from the expert review study. The comments from each session are roughly grouped by the blocks of the semi-structured interview.

Lastly, the PEARL prototype is openly available on GitHub.

Related Publication

@inproceedings{luo2023pearl,
  author = {Weizhou Luo and Zhongyuan Yu and Rufat Rzayev and Marc Satkowski and Stefan Gumhold and Matthew McGinity and Raimund Dachselt},
  title = {PEARL: Physical Environment based Augmented Reality Lenses for In-Situ Human Movement Analysis},
  booktitle = {Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems},
  series = {CHI '23},
  number = {381},
  year = {2023},
  month = {04},
  isbn = {9781450394215},
  location = {Hamburg, Germany},
  numpages = {15},
  doi = {10.1145/3544548.3580715},
  url = {https://doi.org/10.1145/3544548.3580715},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  keywords = {Immersive Analytics, physical referents, augmented/mixed reality, affordance, In-situ visualization, movement data analysis}
}

List of additional material

30-Second Video Preview, 5-min Video Figure, 10-min Recorded Talk, Appendix, GitHub Repository

Acknowledgments

We thank Wolfgang Büschel and Annett Mitschick for their support with this paper, the participants in our study, and the anonymous reviewers for their constructive feedback and suggestions. This work was supported by the German Research Foundation (DFG, Deutsche Forschungsgemeinschaft) under Germany’s Excellence Strategy – EXC-2068 – 390729961 – Cluster of Excellence “Physics of Life” and EXC 2050/1 – Project ID 390696704 – Cluster of Excellence “Centre for Tactile Internet with Human-in-the-Loop” (CeTI) of TU Dresden, by DFG grant 389792660 as part of TRR 248 (see https://perspicuous-computing.science), and by the German Federal Ministry of Education and Research (BMBF, SCADS22B) and the Saxon State Ministry for Science, Culture and Tourism (SMWK) through funding of the competence center for Big Data and AI “ScaDS.AI Dresden/Leipzig”.